
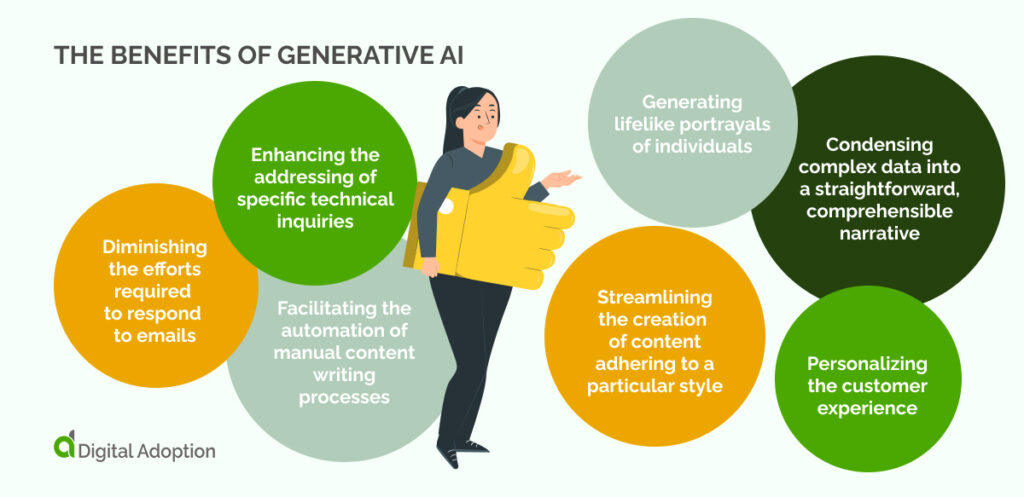

Generative AI has business applications beyond those covered by discriminative models. Various algorithms and related models have been developed and trained to create new, realistic content from existing data.

A generative adversarial network, or GAN, is a machine learning framework that pits two neural networks, a generator and a discriminator, against each other, hence the "adversarial" part. The contest between them is a zero-sum game, where one agent's gain is the other agent's loss. GANs were invented by Ian Goodfellow and his colleagues at the University of Montreal in 2014.

The discriminator outputs a number between 0 and 1. The closer the result is to 0, the more likely the input is fake; conversely, values closer to 1 indicate a higher probability that it is real. Both the generator and the discriminator are often implemented as CNNs (Convolutional Neural Networks), especially when working with images. The adversarial nature of GANs lies in a game-theoretic scenario in which the generator network must compete against an adversary.
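To make the 0-to-1 scoring and the zero-sum framing concrete, here is a minimal Python sketch of the value function from the original GAN formulation, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. It assumes the discriminator ends with a sigmoid; the function names are illustrative, and no real networks are involved, only lists of discriminator outputs.

```python
import math

def sigmoid(logit):
    # Squash the discriminator's raw score into a probability in (0, 1)
    return 1.0 / (1.0 + math.exp(-logit))

def gan_value(d_real, d_fake):
    """Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs on real training samples
    d_fake: discriminator outputs on generated samples
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def discriminator_loss(d_real, d_fake):
    # D maximizes V, so as a loss to minimize it uses -V
    return -gan_value(d_real, d_fake)

def generator_loss(d_real, d_fake):
    # Zero-sum: G minimizes the very quantity D maximizes
    return gan_value(d_real, d_fake)

# A fooled discriminator outputs 0.5 for everything
print(gan_value([0.5, 0.5], [0.5, 0.5]), -math.log(4))
```

When the discriminator is completely fooled and outputs 0.5 everywhere, the value equals -log 4, the equilibrium value derived in the original GAN paper. In practice, many implementations train the generator to maximize log D(G(z)) instead (the "non-saturating" variant) because it gives stronger gradients early in training; the sketch keeps the plain zero-sum form for clarity.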


Its adversary, the discriminator network, attempts to distinguish between samples drawn from the training data and those drawn from the generator. In this scenario, there's always a winner and a loser. Whichever network fails is updated while its rival remains unchanged, and the contest repeats. A GAN is considered successful when the generator creates a fake sample so convincing that it can fool both the discriminator and humans.

First described in a 2017 Google paper, the transformer architecture is a machine learning framework that is highly effective for natural language processing (NLP) tasks. It learns to find patterns in sequential data such as written text or spoken language. Based on the context, the model can predict the next element of the sequence, for example, the next word in a sentence.
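The generation loop behind next-word prediction can be sketched in a few lines of Python: score the candidate tokens, turn the scores into probabilities with softmax, pick one, append it, and repeat. This is an illustration under loud assumptions: `toy_model` and its `TOY_LOGITS` table are hypothetical stand-ins for a trained transformer (they only look at the last token), and the loop uses greedy decoding, always taking the most probable token, which is only one of several possible decoding strategies.

```python
import math

def softmax(logits):
    # Convert raw scores into probabilities that sum to 1 (shifted by max for stability)
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical next-token scores; a real transformer computes these from the whole context.
TOY_LOGITS = {
    "<s>": {"the": 2.0, "cat": 0.5, "sat": 0.1, "</s>": -1.0},
    "the": {"the": -1.0, "cat": 3.0, "sat": 0.2, "</s>": -2.0},
    "cat": {"the": 0.0, "cat": -1.0, "sat": 2.5, "</s>": 0.5},
    "sat": {"the": 0.1, "cat": -0.5, "sat": -1.0, "</s>": 2.0},
}

def toy_model(tokens):
    # Hypothetical stand-in: only the last token determines the scores
    return TOY_LOGITS[tokens[-1]]

def greedy_decode(model, prompt, max_new_tokens=10):
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = softmax(model(tokens))
        next_tok = max(probs, key=probs.get)  # most probable option
        tokens.append(next_tok)               # output becomes part of the input
        if next_tok == "</s>":                # end-of-sequence marker stops generation
            break
    return tokens

print(greedy_decode(toy_model, ["<s>"]))
```

Real systems often sample from the probabilities (for example with temperature or top-k filtering) instead of always taking the maximum, which makes the output less repetitive.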


A vector represents the semantic characteristics of a word, with similar words having vectors that are close in value. The word crown might be represented by the vector [3, 103, 35], while apple could be [6, 7, 17], and pear might look like [6.5, 6, 18]. Of course, these vectors are purely illustrative; real ones have many more dimensions.
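Closeness between word vectors is commonly measured with cosine similarity. The sketch below applies it to the illustrative three-dimensional vectors from the paragraph above; like those vectors, the numbers it produces only demonstrate the idea.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cosine_similarity(u, v):
    # 1.0 means the vectors point the same way; lower values mean less related
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

vectors = {
    "crown": [3, 103, 35],
    "apple": [6, 7, 17],
    "pear": [6.5, 6, 18],
}

sim_apple_pear = cosine_similarity(vectors["apple"], vectors["pear"])
sim_apple_crown = cosine_similarity(vectors["apple"], vectors["crown"])
print(f"apple~pear:  {sim_apple_pear:.3f}")
print(f"apple~crown: {sim_apple_crown:.3f}")
```

The two fruit vectors come out far more similar to each other than either is to crown, which is exactly the property embeddings are trained to have.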

At this stage, information about the position of each token within a sequence is added in the form of another vector, which is summed with the input embedding. The result is a vector reflecting the word's initial meaning and its position in the sentence. It's then fed to the transformer neural network, which consists of two blocks.
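One common way to build that position vector is the sinusoidal encoding from the original transformer paper, sketched below in plain Python. The article does not say which scheme a given model uses (many models learn their position vectors instead), so treat this as one assumed option; `d_model` is the embedding size.

```python
import math

def positional_encoding(pos, d_model):
    """Sinusoidal position vector: sin on even dimensions, cos on odd ones."""
    pe = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model)
        exponent = (i - i % 2) / d_model
        angle = pos / (10000 ** exponent)
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe

def add_position(embedding, pos):
    # The position vector is summed element-wise with the token embedding
    pe = positional_encoding(pos, len(embedding))
    return [e + p for e, p in zip(embedding, pe)]

print(add_position([0.2, -0.1, 0.4, 0.3], pos=2))
```

Because every position gets a distinct pattern of sines and cosines, the same word placed at different positions ends up with different input vectors.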

Mathematically, the relationships between words in a phrase look like distances and angles between vectors in a multidimensional vector space. The self-attention mechanism can detect subtle ways in which even distant data elements in a sequence influence and depend on each other. For example, in the sentences "I poured water from the bottle into the cup until it was full" and "I poured water from the bottle into the cup until it was empty", a self-attention mechanism can distinguish the meaning of "it": in the former case, the pronoun refers to the cup; in the latter, to the bottle.
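The core computation behind self-attention, scaled dot-product attention, can be sketched as follows: each token's query is compared with every key, the scores are turned into weights with softmax, and the output is a weighted mix of the values. For brevity this assumes the queries, keys, and values are passed in directly; in a real transformer they are produced from the token vectors by learned projection matrices, and several attention heads run in parallel.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns (outputs, weights), where weights[i][j] is how much token i attends to token j.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of value vectors, one output dimension at a time
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of attention weights sums to 1, so every output is a blend of the whole sequence, which is how a pronoun's representation can absorb information from a noun several words away.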

A softmax function is used at the end to calculate the probability of different outputs and choose the most probable option. The generated output is appended to the input, and the whole process repeats.

The diffusion model is a generative model that creates new data, such as images or sounds, by mimicking the data on which it was trained.

Think of the diffusion model as an artist-restorer who has studied paintings by old masters and can now paint canvases in the same style. The diffusion model does roughly the same thing in three main stages. Forward diffusion gradually introduces noise into the original image until the result is just a chaotic set of pixels.
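Forward diffusion has a convenient closed form in the widely used DDPM formulation: a noisy version at step t can be drawn directly as x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, where eps is Gaussian noise and alpha_bar_t is the running product of (1 - beta_s). The sketch below assumes DDPM's linear beta schedule (1e-4 to 0.02 over 1,000 steps) and treats an "image" as a flat list of numbers; other diffusion models use different schedules.

```python
import math
import random

def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    # Noise added per step grows linearly (assumes T >= 2)
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]

def alpha_bars(betas):
    # alpha_bar_t = product of (1 - beta_s) for s <= t: the share of signal left
    out, prod = [], 1.0
    for b in betas:
        prod *= (1.0 - b)
        out.append(prod)
    return out

def q_sample(x0, t, abar, rng):
    """Jump straight to step t of forward diffusion; returns (x_t, noise used)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps

betas = linear_beta_schedule(1000)
abar = alpha_bars(betas)
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]
print(q_sample(x0, 10, abar, rng)[0])   # still close to x0
print(q_sample(x0, 999, abar, rng)[0])  # essentially pure noise
```

The closed form means training never has to simulate the steps one by one: it can pick any step t and corrupt a clean example in a single operation.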

To return to our analogy of the artist-restorer, forward diffusion is what time does to a painting, covering it with a network of cracks, dust, and grease; sometimes the painting is also reworked, with certain details added and others removed. Training is like studying a painting to grasp the old master's original intent: the model carefully analyzes how the added noise alters the data.
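In the DDPM formulation (an assumption here; the article does not name a specific model), analyzing how the noise alters the data is implemented by training a network $\epsilon_\theta$ to predict the noise that was added to a clean example. The simplified training objective is:

$$
\mathcal{L}_{\text{simple}}(\theta) = \mathbb{E}_{t,\,x_0,\,\epsilon}\Big[\big\lVert \epsilon - \epsilon_\theta\big(\sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\; t\big)\big\rVert^2\Big],
\qquad \epsilon \sim \mathcal{N}(0, I),\qquad \bar\alpha_t = \prod_{s=1}^{t} (1 - \beta_s)
$$

Here $t$ is drawn uniformly from $1$ to $T$, $\beta_s$ is the noise schedule, and $x_0$ is a training example. A network that predicts the noise well is exactly what the reverse process needs to subtract it again.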


This understanding enables the model to reverse the process later. After training, the model can reconstruct distorted data using a process called reverse diffusion. It starts with a noise sample and removes the noise step by step, the same way our artist removes grime and later layers of paint.
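A sketch of the DDPM sampling loop follows, again assuming the linear noise schedule. The trained network is replaced by a hypothetical `oracle_noise_predictor` that knows the entire "dataset" is a single number, which makes the example runnable and checkable; with a real model, `predict_noise` would be the trained noise-prediction network. The loop starts from pure noise and removes the predicted noise one step at a time, adding a little fresh noise at every step except the last.

```python
import math
import random

def make_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    betas = [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]
    alphas = [1.0 - b for b in betas]
    abar, prod = [], 1.0
    for a in alphas:
        prod *= a
        abar.append(prod)
    return betas, alphas, abar

def oracle_noise_predictor(x0_target):
    """Hypothetical stand-in for a trained network when all data equals x0_target."""
    def predict(xt, t, abar):
        # The exact noise that would turn x0_target into xt at step t
        return (xt - math.sqrt(abar[t]) * x0_target) / math.sqrt(1.0 - abar[t])
    return predict

def reverse_diffusion(predict_noise, T=1000, seed=0):
    betas, alphas, abar = make_schedule(T)
    rng = random.Random(seed)
    x = rng.gauss(0.0, 1.0)  # start from pure noise
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t, abar)
        # Remove this step's share of the predicted noise (DDPM mean update)
        x = (x - betas[t] / math.sqrt(1.0 - abar[t]) * eps_hat) / math.sqrt(alphas[t])
        if t > 0:
            # Re-inject a small amount of noise, except on the final step
            x += math.sqrt(betas[t]) * rng.gauss(0.0, 1.0)
    return x

print(reverse_diffusion(oracle_noise_predictor(3.0)))
```

Because the oracle predicts the noise exactly, the final step lands on the target value. A learned predictor only approximates this, and with a real dataset, different random starting points lead to different samples.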

Think of latent representations as the DNA of an organism. DNA holds the core instructions needed to build and maintain a living being. Similarly, latent representations contain the fundamental elements of the data, allowing the model to regenerate the original information from this encoded essence. But if you change the DNA molecule just a little, you get a completely different organism.
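A toy linear version of this idea is sketched below, assuming four-dimensional data that actually varies along only two directions. The orthonormal `BASIS` is hypothetical and hand-picked; real generative models learn nonlinear encoders and decoders, but the principle is the same: a short latent code is enough to rebuild the data, and nudging the code produces a different output.

```python
# Hypothetical orthonormal basis: a 2-D latent space inside 4-D data space
BASIS = [
    [0.5, 0.5, 0.5, 0.5],
    [0.5, -0.5, 0.5, -0.5],
]

def encode(x):
    # Compress a 4-D point into 2 latent numbers by projecting onto each basis vector
    return [sum(b_i * x_i for b_i, x_i in zip(b, x)) for b in BASIS]

def decode(z):
    # Rebuild a 4-D point as a weighted combination of the basis vectors
    return [sum(z[k] * BASIS[k][i] for k in range(len(BASIS))) for i in range(len(BASIS[0]))]

original = decode([2.0, 1.0])          # a data point that fits the latent structure
print(encode(original))                # its compact "DNA"
print(decode(encode(original)))        # faithfully reconstructed
print(decode([2.0, -1.0]))             # small change to the code, different output
```

Points that do not fit the learned structure lose information in the round trip, which is why the quality of a latent space depends on how well it captures the training data.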


Say, the girl in the second image in the top right looks a bit like Beyoncé, but at the same time, we can see that it's not the pop singer.

As the name suggests, image-to-image translation uses generative AI to transform one type of image into another. There are many variants of image-to-image translation. One of them, style transfer, involves extracting the style from a famous painting and applying it to another image.
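One well-known way to do style transfer is neural style transfer (Gatys et al.), which compares feature maps from a pretrained CNN. Its key trick, summarizing "style" as a Gram matrix of feature correlations, is sketched below in plain Python. The feature lists are hypothetical placeholders for CNN activations, the losses omit the per-layer normalization constants used in the paper, and the weights in `total_loss` are illustrative.

```python
def gram_matrix(features):
    """features: list of channels, each a flattened list of activations of equal length."""
    # Entry (i, j) measures how strongly channels i and j fire together across all positions
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]

def style_loss(gen_features, style_features):
    g = gram_matrix(gen_features)
    s = gram_matrix(style_features)
    n = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)

def content_loss(gen_features, content_features):
    # Position-by-position comparison: keeps the layout of the content image
    diffs = [(a - b) ** 2
             for fg, fc in zip(gen_features, content_features)
             for a, b in zip(fg, fc)]
    return sum(diffs) / len(diffs)

def total_loss(gen, content, style, content_weight=1.0, style_weight=1e3):
    # Illustrative weights; the generated image is optimized to minimize this sum
    return content_weight * content_loss(gen, content) + style_weight * style_loss(gen, style)

features = [[1.0, 2.0], [0.0, 1.0]]   # 2 channels x 2 positions
shuffled = [[2.0, 1.0], [1.0, 0.0]]   # same activations, positions swapped
print(style_loss(features, shuffled), content_loss(features, shuffled))
```

Because a Gram matrix sums over all positions, rearranging where features occur leaves the style loss unchanged while the content loss registers the difference; that split is what lets the method keep one image's layout and another's texture.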

The results of all these programs are fairly similar. Still, some users note that, on average, Midjourney draws a little more expressively, while Stable Diffusion follows the prompt more closely at default settings. Researchers have also used GANs to produce synthesized speech from text input.


That said, the music may change according to the atmosphere of a game scene or the intensity of the user's workout in the gym.

So, logically, videos can also be generated and transformed in much the same way as images. While 2023 was marked by breakthroughs in LLMs and a boom in image generation technologies, 2024 has seen significant advances in video generation. At the beginning of 2024, OpenAI introduced a truly impressive text-to-video model called Sora. Sora is a diffusion-based model that generates video starting from static noise.

NVIDIA's Interactive AI Rendered Virtual World

Such synthetically generated data can help in developing self-driving cars, which can use generated virtual-world training datasets for pedestrian detection, for example.

Any technology can be used for both good and bad, and generative AI is no exception. At present, several challenges exist.

When we say this, we don't mean that tomorrow machines will rise against humanity and destroy the world. Let's be honest, we're pretty good at that ourselves. However, because generative AI can learn on its own, its behavior is hard to control, and the outputs it provides can be far from what you expect.

That's why so many companies are implementing dynamic, intelligent conversational AI models that customers can interact with via text or speech. In addition to customer service, AI chatbots can supplement marketing efforts and support internal communications.

